Master Thesis Topics

Search for so far unmeasured decays at Belle II

Semileptonic B meson decays are decays of a B meson into a lepton, a neutrino, and most likely a charmed meson. Although these decays have been known for decades, open questions remain. One of them is the so-called "gap problem": a discrepancy between the inclusive measurement, where the charmed meson is not reconstructed, and exclusive measurements, where the charmed meson is fully reconstructed. The measured exclusive branching fractions do not sum up to the measured inclusive branching fraction. A possible solution to this problem is the existence of so far unmeasured decay channels of either the B meson or the involved charmed mesons. One candidate is the decay of a heavy charmed meson (here called D**) into an eta and either a D or D* meson (D** → D* eta). These decays have not been measured so far. With Belle II data one can search for the decay B → D* eta l nu, which may solve the gap problem.

Search for B decays with baryon number violation by two units

A precondition for the generation of a baryon asymmetry in our universe is baryon number violation. While the violation of baryon number by one unit (∆B = 1) involving quarks of the first or second generation is severely constrained by the non-observation of proton decay, limits on baryon number violating processes involving third generation quarks are considerably weaker. Processes violating baryon number by two units are even less studied. With Belle II data we can search for ∆B = 2 processes, such as B meson decays to a deuteron and light mesons or leptons.

Determination of B meson quantum numbers

It is well known and often assumed that, according to the quark model, the ground state B mesons are pseudoscalar mesons. Interestingly, the spin and parity of the B mesons were never explicitly measured. With an angular analysis the quantum numbers of B mesons should be measured at Belle II.

Reconstruction of neutrons and search for B -> K n nbar (topic not available any more)

Neutrons are rarely produced in particle decays and are often not considered in the detector design and reconstruction software of high energy physics experiments. Nevertheless, they can play an important role. For example, the B -> K n nbar decay is a background in the measurement of the B -> K nu nubar decay, but has not been experimentally measured so far. The neutron reconstruction at Belle II and the sensitivity to the B -> K nu nubar decay should be studied.

Measurement of the branching fraction of B0 -> D(*)- K+

The theoretical calculation of decays like B0 -> D(*)- K+ requires a good understanding of hadronic effects. Recent calculations have achieved a high precision and show astonishing deviations from the measured values. In this project, a measurement of the branching fractions of B0 -> D- K+ and B0 -> D*- K+ should be performed for the first time with data of the Belle II experiment. An independent result is an important step towards a solution of the puzzle.

Measurement of the tau electric dipole moment

Electric dipole moments (EDMs) are a sensitive probe of CP violation. Tight constraints exist in particular for processes with first generation quark level transitions. The task of this topic is to measure the EDM of the tau lepton at Belle II. With a much larger dataset and an improved understanding of the detector we expect to significantly improve current constraints.

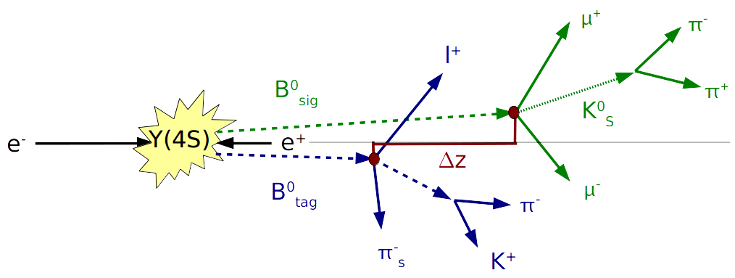

Development of a new B meson tagging method

A key feature of B factories is that they produce clean events of B meson pairs in reactions e+e- -> Y(4S) -> B anti-B. This feature is exploited by many analyses, e.g. for the reconstruction of B meson decays to invisible particles. In these analyses the second B meson is usually reconstructed in a hadronic or semileptonic decay, called tagging. The aim of this topic is the development of a new tagging algorithm that applies machine learning techniques to determine for each final state particle to which of the two B mesons it belongs. As a first step the general potential and applicability to selected analyses should be studied.

On-the-fly intelligent background simulation with distributed model training (topic not available any more)

Searches for rare decays require a good understanding of backgrounds. Often huge amounts of simulated data, which are costly to produce, are used for this purpose. One aspect that makes this very inefficient is that events which are not selected as signal candidates still run through the full simulation chain because the selection quantities are only known afterwards. One approach to improve this at the Belle II experiment involves predicting with a machine learning model which events fulfill the selection criteria before the expensive detector simulation and event reconstruction are performed. However, to train such a model, a sufficiently large labeled dataset has to be produced with the inefficient process beforehand, which is especially problematic for cases with low selection efficiencies. It was shown that one can train the model on-the-fly while producing the data with increasing efficiency. To avoid being limited by the resources available on one machine, distributed model training should be investigated.
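The on-the-fly idea can be illustrated with a toy loop. This is a minimal sketch under stated assumptions, not the Belle II implementation: the one-dimensional feature, the logistic model, the function `expensive_simulation`, the keep-probability floor `p_min`, and the importance weighting are all hypothetical choices made for illustration, and the distributed aspect is not covered.

```python
import numpy as np

rng = np.random.default_rng(seed=1)


def expensive_simulation(x):
    """Toy stand-in for detector simulation, reconstruction and selection."""
    return float(x[0] + 0.5 * rng.normal() > 1.5)


def predict(w, x):
    """Logistic model: estimated probability that the event is selected."""
    return 1.0 / (1.0 + np.exp(-(w[0] + w[1] * x[0])))


w = np.zeros(2)        # model parameters, updated while data are produced
learning_rate = 0.01
p_min = 0.05           # floor on the keep probability (assumption)
n_generated = 0
n_simulated = 0
weighted_pass = 0.0    # weighted count of selected events

for _ in range(5000):
    x = rng.normal(size=1)              # cheap generator-level feature
    n_generated += 1
    p_keep = max(predict(w, x), p_min)
    if rng.random() > p_keep:
        continue                        # skip the expensive steps
    n_simulated += 1
    label = expensive_simulation(x)
    weight = 1.0 / p_keep               # corrects for the biased sampling
    weighted_pass += weight * label
    # One weighted stochastic gradient step on the logistic loss.
    grad = weight * (predict(w, x) - label) * np.array([1.0, x[0]])
    w -= learning_rate * grad

print(f"simulated {n_simulated} of {n_generated} generated events")
```

As the model improves, fewer events that would fail the selection are passed to the expensive step, while the weights keep the retained sample usable for unbiased estimates.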

Optimization of physics software with AI methods

In recent years new developments in artificial intelligence have had a large impact on many areas, including software development. Large language models show impressive skills in the analysis and generation of code. How well this applies to the domain specific code of the Belle II experiment should be investigated, in terms of software quality, resource efficiency, and physics performance. Knowledge of C++ is required for this project.

Belle II detector with augmented reality

A 3D printed model of the Belle II detector was created to explain to the general public how it works. To further improve the user experience and help in understanding the detector concept, an augmented reality application for mobile devices should be developed that shows simulated events and provides additional explanations. Web programming skills are required; experience with WebAR would be beneficial.

Neural network for the classification of Y(4S) events (topic not available any more)

B factory experiments such as Belle II are well suited to measure branching fractions of B mesons because the number of Y(4S) -> B Bbar events can be determined well. However, the fractions of Y(4S) decays to pairs of neutral and charged B mesons are needed as well and their ratio may depend on the center of mass energy. For a measurement of this energy dependency a multivariate classifier should be developed that can distinguish between pairs of neutral and charged B mesons.

Measurement of the eta reconstruction efficiency at Belle II (topic not available any more)

Eta mesons are used in many analyses at the Belle II experiment. Therefore one needs to understand very well how well they are reconstructed. The purpose of this task is to study the reconstruction of eta mesons using D meson decays. For this, D mesons are reconstructed from the decay D*+ -> D0 pi+, where the subsequent decay of the D0 meson can either be D0 -> K+pi- or D0 -> K+pi-eta (among others). By comparing these two decay channels one can deduce the reconstruction efficiency of the eta meson. The big advantage of this method is that one can directly compare data and simulation and use it to study potential data-simulation differences.
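The comparison of the two channels can be sketched as follows. This is a minimal illustration, assuming that the kaon and pion efficiencies cancel in the ratio of yields and that the branching fraction of the eta channel already includes the eta decay mode used. The function names and all numbers are hypothetical placeholders, not measured values.

```python
def eta_efficiency(n_kpieta, n_kpi, bf_kpieta, bf_kpi):
    """Estimate the eta reconstruction efficiency from two D0 channels.

    Assumes the kaon and pion efficiencies cancel in the ratio, so that
    N(K pi eta) / N(K pi) = eff_eta * BF(K pi eta) / BF(K pi).
    """
    if n_kpi <= 0 or bf_kpieta <= 0:
        raise ValueError("yield and branching fraction must be positive")
    return (n_kpieta / n_kpi) * (bf_kpi / bf_kpieta)


def data_mc_ratio(eff_data, eff_mc):
    """Ratio of the efficiency in data to the efficiency in simulation."""
    return eff_data / eff_mc


# Placeholder inputs for illustration only (not measured values).
eff_data = eta_efficiency(n_kpieta=1200.0, n_kpi=10000.0, bf_kpieta=0.02, bf_kpi=0.04)
eff_mc = eta_efficiency(n_kpieta=1250.0, n_kpi=10000.0, bf_kpieta=0.02, bf_kpi=0.04)
print(f"eta efficiency (data): {eff_data:.3f}")
print(f"data/simulation ratio: {data_mc_ratio(eff_data, eff_mc):.3f}")
```

Applying the same function to data and to simulation gives a ratio that could serve as a correction factor for simulated eta efficiencies.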

Measurement of energy dependence of strong isospin violation in Y(4S) decays (topic not available any more)

Measurements of branching fractions of B mesons are very important. On the one hand they can be compared with theoretical predictions and thus test the standard model of particle physics. On the other hand a precise knowledge of branching fractions is important for a good understanding of the composition of data, for which simulations are often used. B factory experiments are well suited for the measurement of B meson branching fractions because the number of e+e- -> Y(4S) -> B anti-B events can be determined well. However, the probabilities of Y(4S) decays to pairs of neutral or charged B mesons, called f00 and f±, have to be known. In case of strong isospin symmetry both rates would be equal. Recent theoretical studies suggest an isospin violation that depends on the center of mass energy. This should be checked with Belle II data.
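The role of f00 in a branching fraction measurement can be made explicit with a short sketch. It assumes an untagged measurement of a neutral B decay, in which either of the two B mesons in an event can produce the signal (hence the factor of 2). The function name and all input numbers are hypothetical placeholders.

```python
def branching_fraction(n_signal, n_bb, f_pair, efficiency):
    """BF = N_sig / (2 * N_BB * f * eff) for an untagged measurement.

    n_bb is the number of Y(4S) -> B anti-B events and f_pair is the
    fraction of those that are pairs of the relevant B species (f00 or f+-).
    """
    return n_signal / (2.0 * n_bb * f_pair * efficiency)


# Placeholder inputs for illustration only (not measured values).
bf_symmetric = branching_fraction(n_signal=1000.0, n_bb=1.0e6, f_pair=0.50, efficiency=0.1)
bf_shifted = branching_fraction(n_signal=1000.0, n_bb=1.0e6, f_pair=0.48, efficiency=0.1)
relative_shift = bf_shifted / bf_symmetric - 1.0
print(f"relative change of the branching fraction: {relative_shift:.1%}")
```

Because the result scales with 1/f00, any uncertainty or energy dependence of f00 carries over directly to the measured branching fraction.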

Test of lepton universality with inclusive tagging (topic not available any more)

The world averages of R(D(*)) = BR(B -> D(*) tau nu) / BR(B -> D(*) l nu) deviate significantly from the standard model prediction. To check whether lepton universality is violated, further measurements are needed. A new analysis method uses inclusive tagging. Here it is checked if, after the reconstruction of the signal decay B -> D* l nu, all remaining particles are compatible with originating from the second B meson in Y(4S) -> B anti-B events. This method has the advantage of a higher efficiency compared with an exclusive reconstruction of decays of the second B meson, but also leads to a higher background level.

Generic new physics search with machine learning (topic not available any more)

New physics can be established by measuring deviations from standard model predictions. Usually this approach is implemented for selected observables. New physics effects may be overlooked if they show up in observables that were not considered or only in correlations of observables. A detailed and more generic comparison of measurements and theoretical predictions may be achieved by machine learning algorithms. Besides the design of the machine learning model, a challenge will be to decide if observed deviations should be attributed to new physics or to imperfections in theory calculations or detector simulation. This topic is explorative and requires the combination of knowledge from multiple areas.

Broad search for matter antimatter asymmetries (topic not available any more)

How to explain the origin of the asymmetry between matter and antimatter in our universe, which is an essential condition for forming galaxies, stars, planets, and finally life, is still an outstanding mystery. So far measurements of CP violation have mainly focused on exclusive decays that are expected to show significant effects. In this explorative study we want to search for matter antimatter asymmetries in a broader and more inclusive way to be sensitive to effects that may have been overlooked so far. As a first step one has to understand intrinsic asymmetries in particle detection.

Simplified statistical models for faster interpretation in searches for new physics (topic not available any more)

High-energy physics research has generated vast amounts of experimental data, along with statistical models aimed at identifying signs of new physics beyond the Standard Model. Increasingly, these models are shared as open data, making it possible to test a wide range of theoretical frameworks. However, evaluating these models can be computationally demanding due to the complexity of statistical fits, which often involve numerous parameters. This makes the analysis slow and resource-intensive.

This project entails creating an approximate version of these statistical models that retains key properties while significantly reducing computational requirements. By simplifying the models, this project would allow faster exclusion of unlikely theories and help focus on the most promising ones. This more efficient scanning would increase the practical usability of experimental results, particularly for theories with many free parameters.

Track reconstruction at Belle II with ACTS

The code for the reconstruction of trajectories of charged particles is usually developed specifically for each experiment. With A Common Tracking Software (ACTS) a library has become available that provides tracking algorithms that can be applied in multiple experiments. Besides the known advantages of shared code, ACTS may also help to address bottlenecks in the execution time of custom code. The replacement of parts of the Belle II tracking code by ACTS should be studied.

Feasibility of an R(D*) measurement at FCC-ee (topic not available any more)

The particle physics community is currently discussing the future direction of the field. An electron positron Future Circular Collider at CERN (FCC-ee) could produce a huge number of Z bosons, which offers many opportunities for flavor physics measurements. The feasibility of an R(D*) measurement at FCC-ee should be studied. This requires setting up the relevant software stacks.

Belle II chatbot (topic not available any more)

Large language models have a huge impact in many areas. In science they may provide a completely new access to domain specific knowledge. The aim of this project is to fine-tune a large language model on Belle II specific information. A main technical challenge will be to access and aggregate data from various systems.